MT3D: A 3D Missing Tooth Detection Framework and Dataset

Motivation

Progress toward fully autonomous robotic dental surgery is limited by the lack of large missing-tooth datasets. Earlier deep learning methods rely on 2D X-ray images, which are insufficient for robotic surgery because it requires precise 3D localization of the missing tooth.

Method

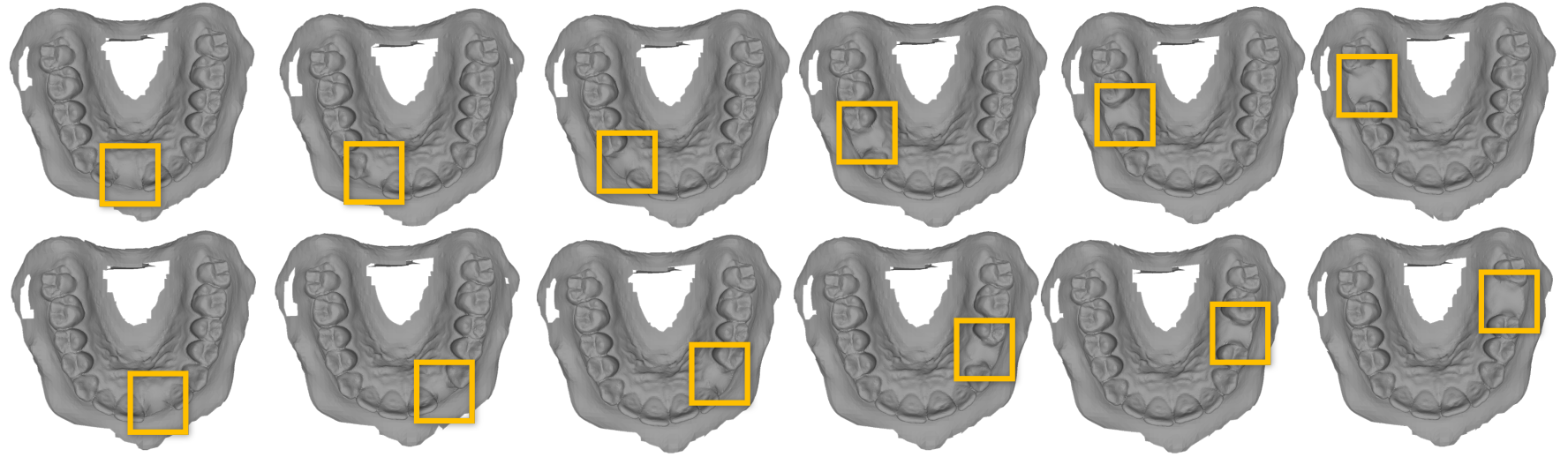

A Synthetic 3D Missing Tooth Dataset (MT3D): The authors created a dataset by taking 175 complete intraoral scans from the public Teeth3DS dataset. From each scan, 12 different tooth loss scenarios were synthetically generated. A pipeline was developed to generate a natural-looking healed-ridge surface and a corresponding 3D bounding box label for the removed tooth.
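The labeling step can be illustrated with a minimal sketch. It assumes each scan is available as a list of points with a per-point tooth label, and it derives an axis-aligned box from the removed tooth's points. The function name `remove_tooth` and the dictionary layout are hypothetical. The healed-ridge surface generation and the box orientation described in the paper are not reproduced here.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def remove_tooth(
    points: List[Point], labels: List[int], tooth_id: int
) -> Tuple[List[Point], Dict[str, Tuple[float, ...]]]:
    """Drop one tooth from a labeled scan and return a box label for it.

    Simplification (assumption): the box is axis-aligned, so it carries
    only a center and a size, with no orientation.
    """
    removed = [p for p, lab in zip(points, labels) if lab == tooth_id]
    if not removed:
        raise ValueError(f"tooth {tooth_id} is not present in this scan")
    kept = [p for p, lab in zip(points, labels) if lab != tooth_id]

    # Per-axis extent of the removed tooth's points.
    mins = [min(p[i] for p in removed) for i in range(3)]
    maxs = [max(p[i] for p in removed) for i in range(3)]
    box = {
        "center": tuple((lo + hi) / 2 for lo, hi in zip(mins, maxs)),
        "size": tuple(hi - lo for lo, hi in zip(mins, maxs)),
    }
    return kept, box


# Toy example: four points, two of which belong to tooth 36.
scan = [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0), (2.0, 0.0, 1.0), (5.0, 5.0, 5.0)]
scan_labels = [0, 36, 36, 0]
kept_points, box_label = remove_tooth(scan, scan_labels, tooth_id=36)
```

Calling such a function once per chosen tooth would give one sample per tooth loss scenario. How the 12 scenarios per scan are selected is not specified in this summary.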

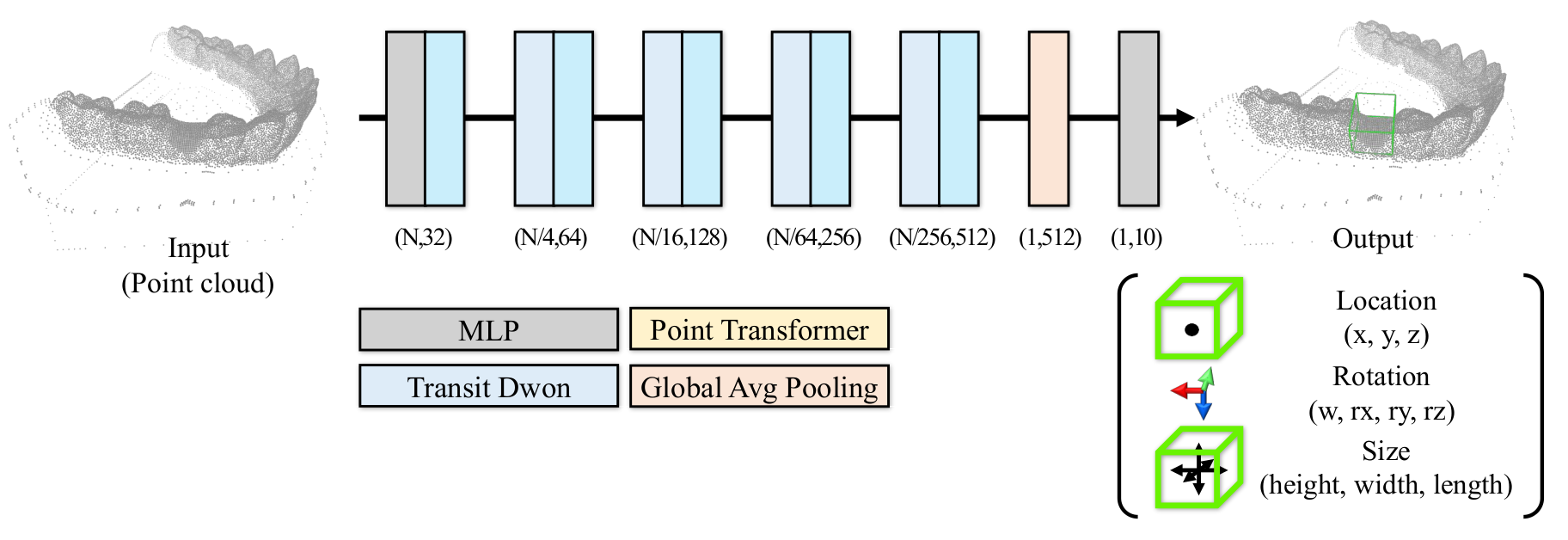

A 3D Detection Framework: A model based on the Point Transformer architecture was proposed to predict the 3D bounding box (location, orientation, and size) of a missing tooth directly from a point cloud. The model is trained using a multi-task objective, which includes L1 loss for the box’s center and size, MSE loss for its quaternion orientation, and an auxiliary cosine similarity loss to stabilize rotational training.
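The multi-task objective can be sketched for a single predicted box as follows. This is an illustration under stated assumptions, not the authors' implementation. The loss weights (all 1.0), the `(w, x, y, z)` quaternion layout, and the choice to compare the box's rotated local z axis in the cosine term are assumptions. The names `box_loss` and `rotated_z_axis` are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def l1(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error over the components."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error over the components."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def rotated_z_axis(q: Sequence[float]) -> Tuple[float, float, float]:
    """Rotate the local z axis (0, 0, 1) by quaternion q = (w, x, y, z)."""
    norm = math.sqrt(sum(c * c for c in q))
    if norm == 0.0:
        raise ValueError("a zero quaternion has no orientation")
    w, x, y, z = (c / norm for c in q)
    # Third column of the rotation matrix of a unit quaternion.
    return (2 * (x * z + w * y), 2 * (y * z - w * x), 1 - 2 * (x * x + y * y))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(u * v for u, v in zip(a, b))
    norm_a = math.sqrt(sum(u * u for u in a))
    norm_b = math.sqrt(sum(v * v for v in b))
    return dot / (norm_a * norm_b)


def box_loss(
    pred: Dict[str, Sequence[float]],
    target: Dict[str, Sequence[float]],
    w_center: float = 1.0,  # hypothetical weights, not from the paper
    w_size: float = 1.0,
    w_quat: float = 1.0,
    w_axis: float = 1.0,
) -> float:
    """L1 on center and size, MSE on the quaternion, plus a cosine axis term."""
    axis_term = 1.0 - cosine_similarity(
        rotated_z_axis(pred["quat"]), rotated_z_axis(target["quat"])
    )
    return (
        w_center * l1(pred["center"], target["center"])
        + w_size * l1(pred["size"], target["size"])
        + w_quat * mse(pred["quat"], target["quat"])
        + w_axis * axis_term
    )


target_box = {"center": (0.0, 0.0, 0.0), "size": (8.0, 9.0, 7.0), "quat": (1.0, 0.0, 0.0, 0.0)}
flipped_box = dict(target_box, quat=(0.0, 1.0, 0.0, 0.0))  # 180 degrees about x
loss_same = box_loss(target_box, target_box)
loss_flipped = box_loss(flipped_box, target_box)
```

One property of this particular sketch is that the axis term is unchanged when a quaternion is replaced by its negative, since both encode the same rotation, whereas the MSE term is not.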

Experiments and Results

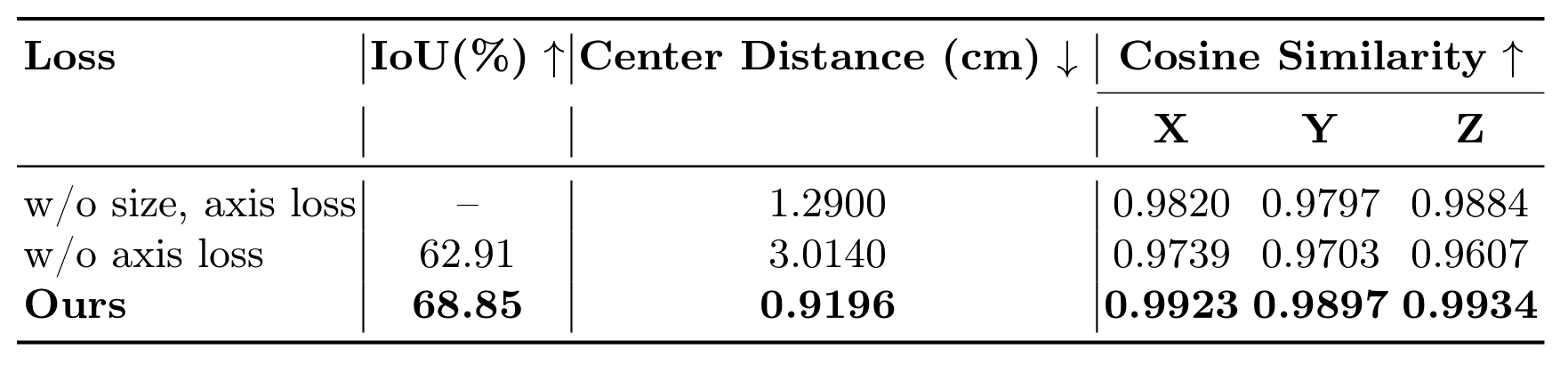

The full model, trained on 4,200 generated samples, achieved the best performance, with a 3D IoU of 68.85%. An ablation study confirmed that an auxiliary loss on the rotational axis was crucial for achieving stable and accurate results.
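For readers unfamiliar with the metric, 3D IoU is the volume of the intersection of the predicted and ground-truth boxes divided by the volume of their union. The sketch below computes it for axis-aligned boxes only, which is a deliberate simplification: the boxes in this work also carry an orientation, and IoU for rotated boxes requires a more involved intersection computation. The function name is hypothetical.

```python
from typing import Sequence


def iou_3d_axis_aligned(
    center_a: Sequence[float],
    size_a: Sequence[float],
    center_b: Sequence[float],
    size_b: Sequence[float],
) -> float:
    """Intersection over union of two axis-aligned 3D boxes."""
    intersection = 1.0
    for ca, sa, cb, sb in zip(center_a, size_a, center_b, size_b):
        lo = max(ca - sa / 2, cb - sb / 2)
        hi = min(ca + sa / 2, cb + sb / 2)
        if hi <= lo:
            return 0.0  # no overlap along this axis
        intersection *= hi - lo

    volume_a = size_a[0] * size_a[1] * size_a[2]
    volume_b = size_b[0] * size_b[1] * size_b[2]
    return intersection / (volume_a + volume_b - intersection)


# Two 2 x 2 x 2 boxes offset by 1 along x overlap in a 1 x 2 x 2 region.
iou_shifted = iou_3d_axis_aligned((0, 0, 0), (2, 2, 2), (1, 0, 0), (2, 2, 2))
```

In this toy case the intersection volume is 4 and the union is 8 + 8 - 4 = 12, so the IoU is 1/3.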

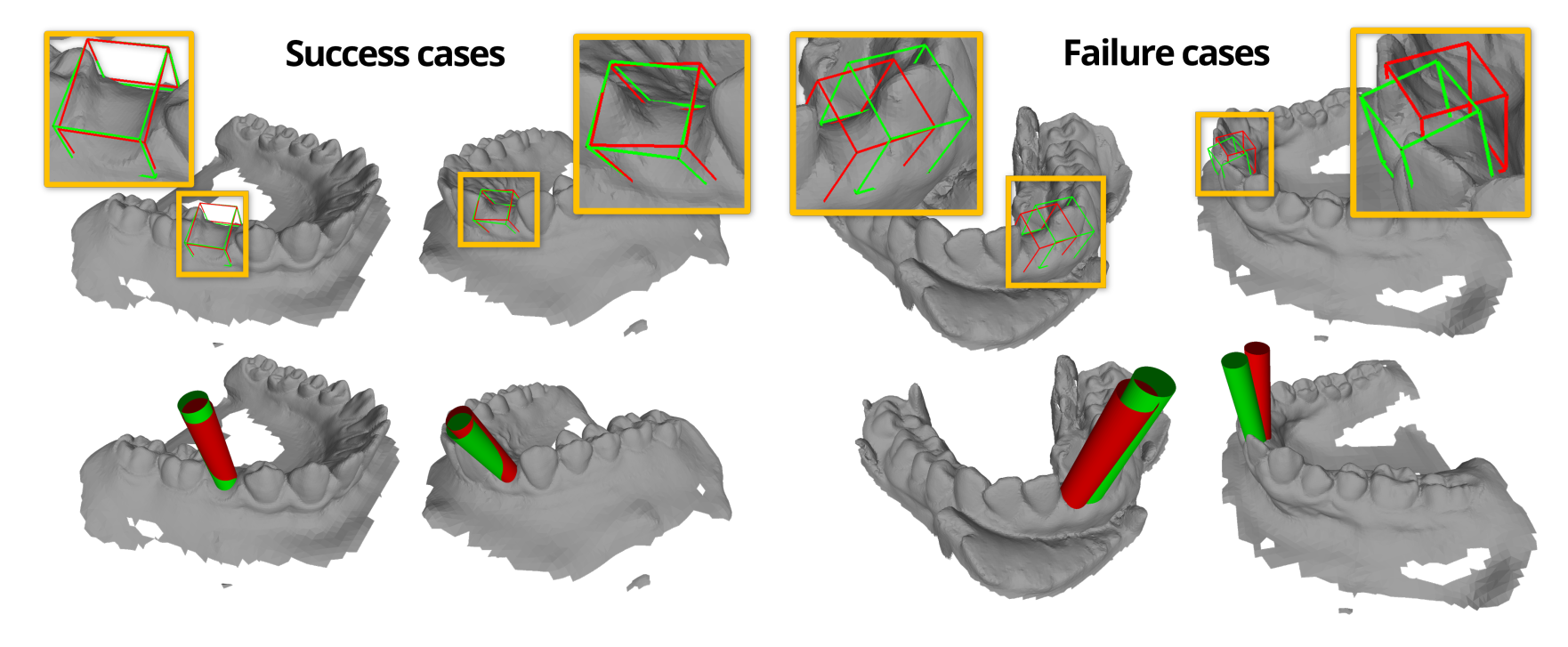

Qualitative results showed high accuracy in molar regions, which are relatively flat. Failures were more common in the anterior (incisor) region, where the surface is narrower and more curved.