MonoFish: Depth-aware Monocular Fisheye 3D Object Detection

Summer Annual Conference of IEIE

Motivation

Why is 3D Object Detection on Fisheye Cameras Difficult?

- Fisheye cameras are widely used in modern vehicles for features like the Surround View Monitor (SVM), but they remain underutilized for 3D perception tasks. This is due to two main challenges:

- Severe Distortion: The significant radial distortion from fisheye lenses makes it difficult to directly apply 3D detection models that are built on the standard pinhole camera model.

- Lack of Datasets: Few public datasets provide 3D bounding box labels that are properly aligned with fisheye camera views. Datasets like KITTI-360 include fisheye images, but their 3D annotations are not prepared for direct use with these views.
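To make the distortion problem concrete, the sketch below compares where a ray at angle θ from the optical axis lands on the image under the pinhole model (r = f·tan θ) and under an equidistant fisheye model (r = f·θ). The equidistant model and the focal length are assumptions chosen for illustration. Real fisheye lenses are calibrated with their own, more detailed models.

```python
import math

def pinhole_radius(theta: float, f: float) -> float:
    """Image radius (pixels) of a ray at angle theta (radians) from the optical axis, pinhole model."""
    return f * math.tan(theta)

def equidistant_radius(theta: float, f: float) -> float:
    """Same ray under an equidistant fisheye model r = f * theta (assumed lens model)."""
    return f * theta

f = 300.0  # hypothetical focal length in pixels
for deg in (10, 45, 80, 89):
    theta = math.radians(deg)
    print(f"{deg:>2} deg  pinhole r = {pinhole_radius(theta, f):9.1f}  "
          f"fisheye r = {equidistant_radius(theta, f):6.1f}")
```

As θ approaches 90°, the pinhole radius grows without bound while the fisheye radius stays finite. This is why a detector that assumes pinhole geometry cannot simply be run on the raw fisheye image.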

Method

Architecture: Depth-Aware Approach

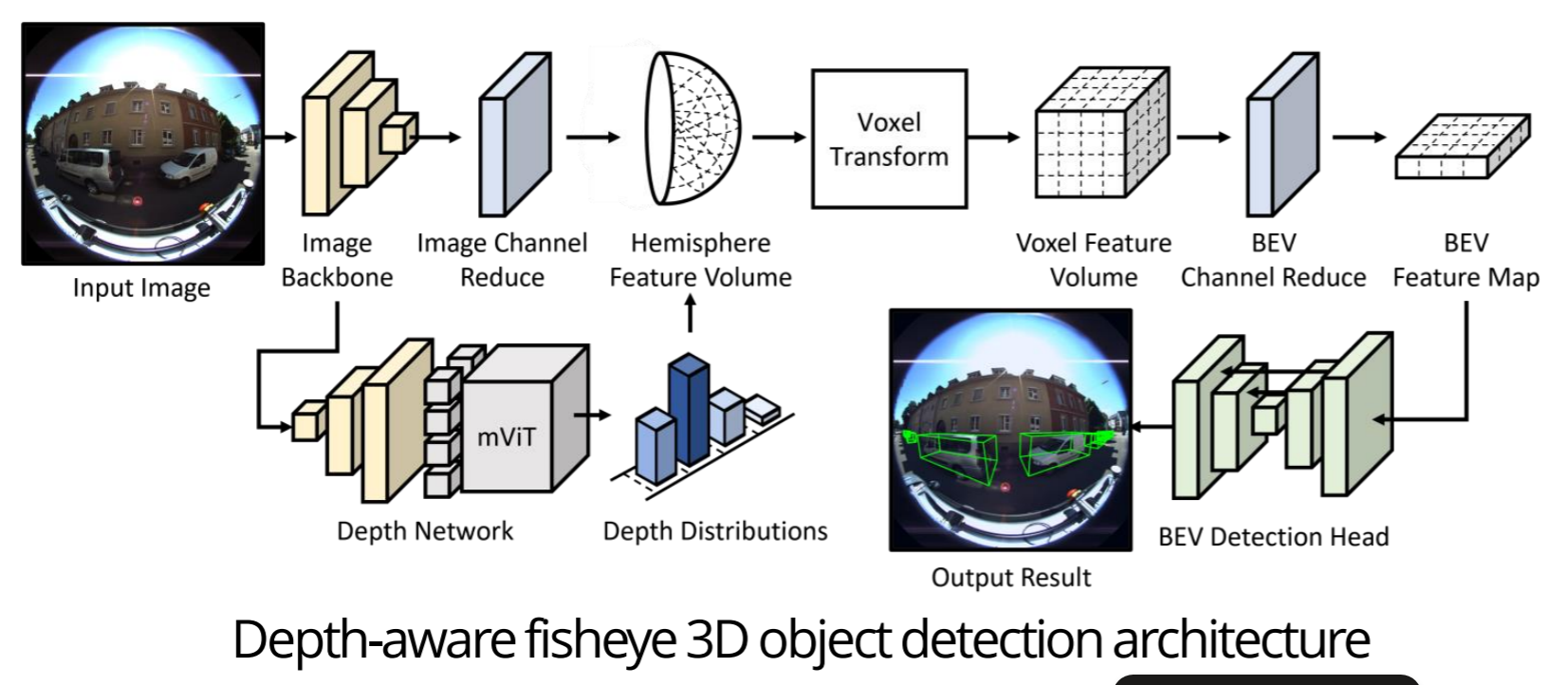

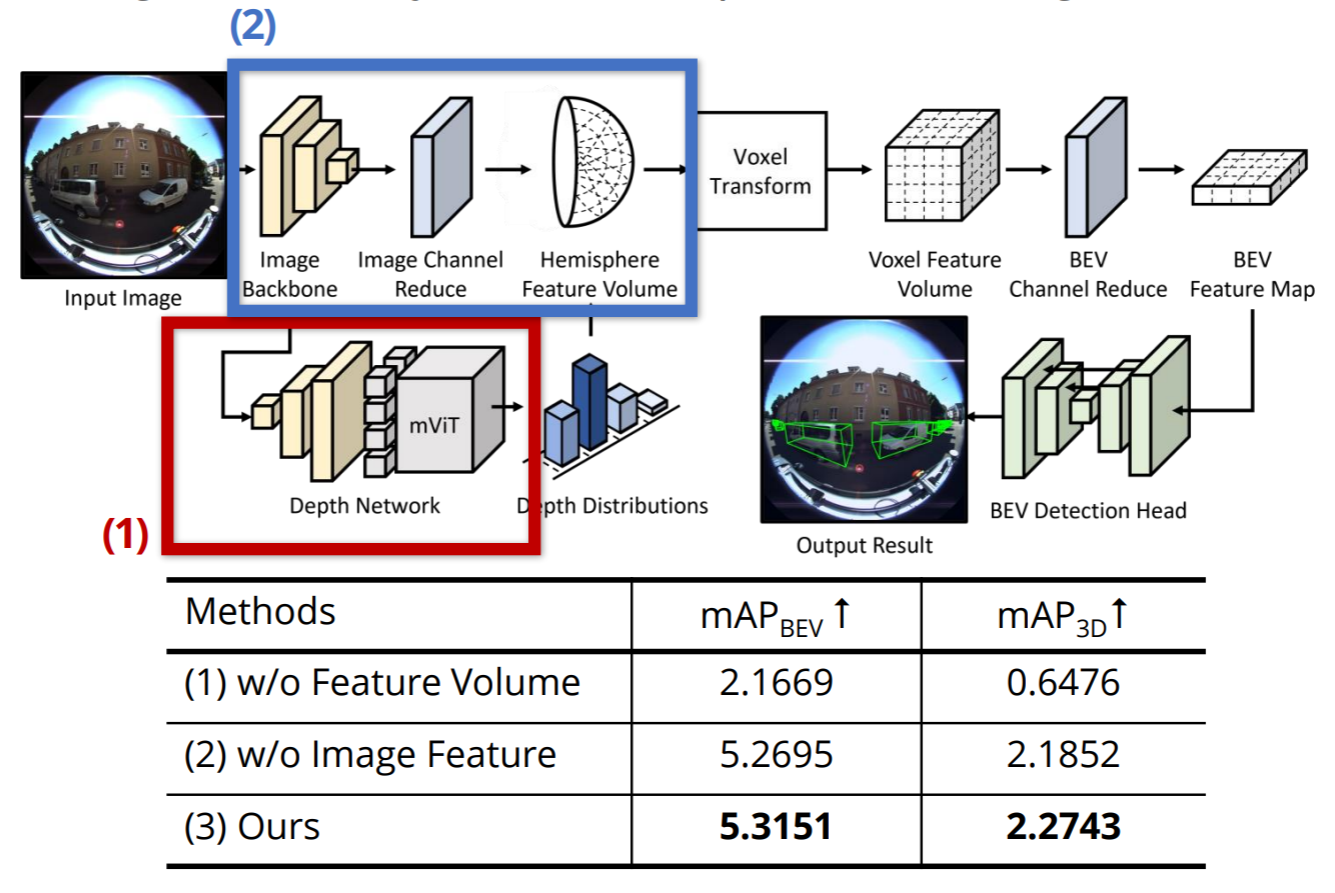

- To address these issues, this research introduces a depth-aware framework for 3D object detection using a single monocular fisheye image.

- Feature Extraction: The model first extracts Image Features using an EfficientNet backbone and predicts Depth Distributions using a pre-trained depth network.
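One common way to represent a depth distribution is a categorical distribution over discrete depth bins at each feature-map location. The sketch below assumes uniformly spaced bins and a depth head that outputs one logit per bin. The bin range, bin count, and function names are hypothetical, and the work itself may discretize or normalize depth differently.

```python
import numpy as np

def depth_bins(d_min: float, d_max: float, num_bins: int) -> np.ndarray:
    """Centers of uniformly spaced depth bins in meters (uniform spacing is an assumption)."""
    edges = np.linspace(d_min, d_max, num_bins + 1)
    return 0.5 * (edges[:-1] + edges[1:])

def depth_distribution(logits: np.ndarray) -> np.ndarray:
    """Softmax over the bin axis: (D, H, W) logits -> (D, H, W) probabilities."""
    shifted = logits - logits.max(axis=0, keepdims=True)  # subtract max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=0, keepdims=True)

# Hypothetical sizes: 48 depth bins over 1-49 m on a 4x6 feature map.
rng = np.random.default_rng(0)
bins = depth_bins(1.0, 49.0, 48)
probs = depth_distribution(rng.normal(size=(48, 4, 6)))
expected_depth = (probs * bins[:, None, None]).sum(axis=0)  # (4, 6) expected depth per location
```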

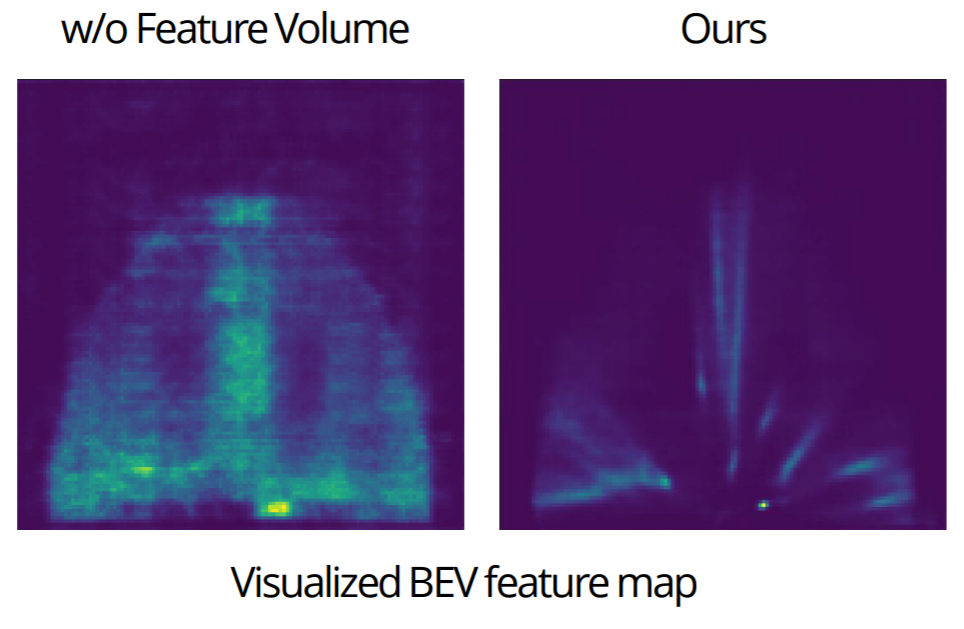

- Hemisphere Feature Volume: The image features and depth distributions are combined to construct a Hemisphere Feature Volume, a representation that preserves the unique geometry of the fisheye view.
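A minimal sketch of the combination step, assuming it is an outer product in the style of Lift-Splat-Shoot: each pixel's feature vector is copied along its viewing ray and weighted by the probability of each depth bin. The tensor layout and the function name are assumptions, not the authors' implementation.

```python
import numpy as np

def hemisphere_feature_volume(features: np.ndarray, depth_probs: np.ndarray) -> np.ndarray:
    """Lift (C, H, W) image features with (D, H, W) depth probabilities into a (C, D, H, W) volume.

    Cell (c, d, v, u) holds feature c of pixel (v, u) weighted by P(depth bin d | pixel (v, u)).
    The outer-product form is an assumption borrowed from Lift-Splat-style methods.
    """
    if features.shape[1:] != depth_probs.shape[1:]:
        raise ValueError("features and depth_probs must share the same (H, W)")
    return np.einsum("chw,dhw->cdhw", features, depth_probs)

# Hypothetical shapes: 8 channels, 16 depth bins, 4x6 feature map.
rng = np.random.default_rng(0)
feats = rng.normal(size=(8, 4, 6))
logits = rng.normal(size=(16, 4, 6))
probs = np.exp(logits) / np.exp(logits).sum(axis=0, keepdims=True)  # per-pixel softmax over bins
volume = hemisphere_feature_volume(feats, probs)
```

Because each pixel's depth probabilities sum to one, summing the volume over the depth axis returns the original feature map. The lifting redistributes features along rays without changing their total.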

- BEV Transformation & Detection: This volume is transformed into 3D world coordinates (voxels) and then projected into a Bird’s-Eye View (BEV) feature map. Finally, a BEV-based detection head predicts the 3D bounding boxes.
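The volume-to-BEV step can be sketched as follows. Each (depth bin, pixel) cell is placed in 3D at depth × viewing-ray direction, its features are summed into the voxel that contains it, and the voxel grid is collapsed along the height axis. The equidistant fisheye model, the camera-frame axes (x right, y down, z forward), summation as the pooling rule, and all names and ranges are assumptions for illustration.

```python
import numpy as np

def fisheye_rays(H, W, f, cx, cy):
    """Unit viewing ray per pixel, assuming an equidistant fisheye model r = f * theta.
    Camera frame (assumed): x right, y down, z forward along the optical axis."""
    v, u = np.meshgrid(np.arange(H, dtype=float), np.arange(W, dtype=float), indexing="ij")
    x, y = u - cx, v - cy
    theta = np.hypot(x, y) / f          # angle from the optical axis
    phi = np.arctan2(y, x)              # azimuth in the image plane
    return np.stack([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)], axis=-1)  # (H, W, 3)

def volume_to_bev(volume, bins, rays, x_range, y_range, z_range, voxel):
    """Scatter a (C, D, H, W) volume into voxels, then sum over height (y) to a (C, NZ, NX) BEV map."""
    C = volume.shape[0]
    pts = (bins[:, None, None, None] * rays[None]).reshape(-1, 3)  # (D*H*W, 3) cell centers in 3D
    feats = volume.reshape(C, -1).T                                 # (D*H*W, C), same cell order
    lo = np.array([x_range[0], y_range[0], z_range[0]])
    hi = np.array([x_range[1], y_range[1], z_range[1]])
    dims = np.round((hi - lo) / voxel).astype(int)                  # (NX, NY, NZ)
    idx = np.floor((pts - lo) / voxel).astype(int)                  # voxel index of each cell
    valid = np.all((idx >= 0) & (idx < dims), axis=1)               # drop cells outside the grid
    ix, iy, iz = idx[valid].T
    lin = (iy * dims[2] + iz) * dims[0] + ix                        # flat index into (NY, NZ, NX)
    grid = np.zeros((dims[1] * dims[2] * dims[0], C))
    np.add.at(grid, lin, feats[valid])                              # sum features per voxel
    grid = grid.reshape(dims[1], dims[2], dims[0], C)               # (NY, NZ, NX, C)
    return grid.sum(axis=0).transpose(2, 0, 1)                      # collapse height -> (C, NZ, NX)

# Toy check: one feature on the optical axis at 10 m should land at x = 0, z = 10 in BEV.
H = W = 5
rays = fisheye_rays(H, W, f=2.0, cx=2.0, cy=2.0)
bins = np.array([5.0, 10.0])
volume = np.zeros((1, 2, H, W))
volume[0, 1, 2, 2] = 1.0
bev = volume_to_bev(volume, bins, rays, (-10.0, 10.0), (-2.0, 2.0), (0.0, 20.0), voxel=1.0)
```

Summing over height is the simplest way to collapse the grid. Other BEV pipelines flatten height into channels instead, and the source does not say which choice is used here.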

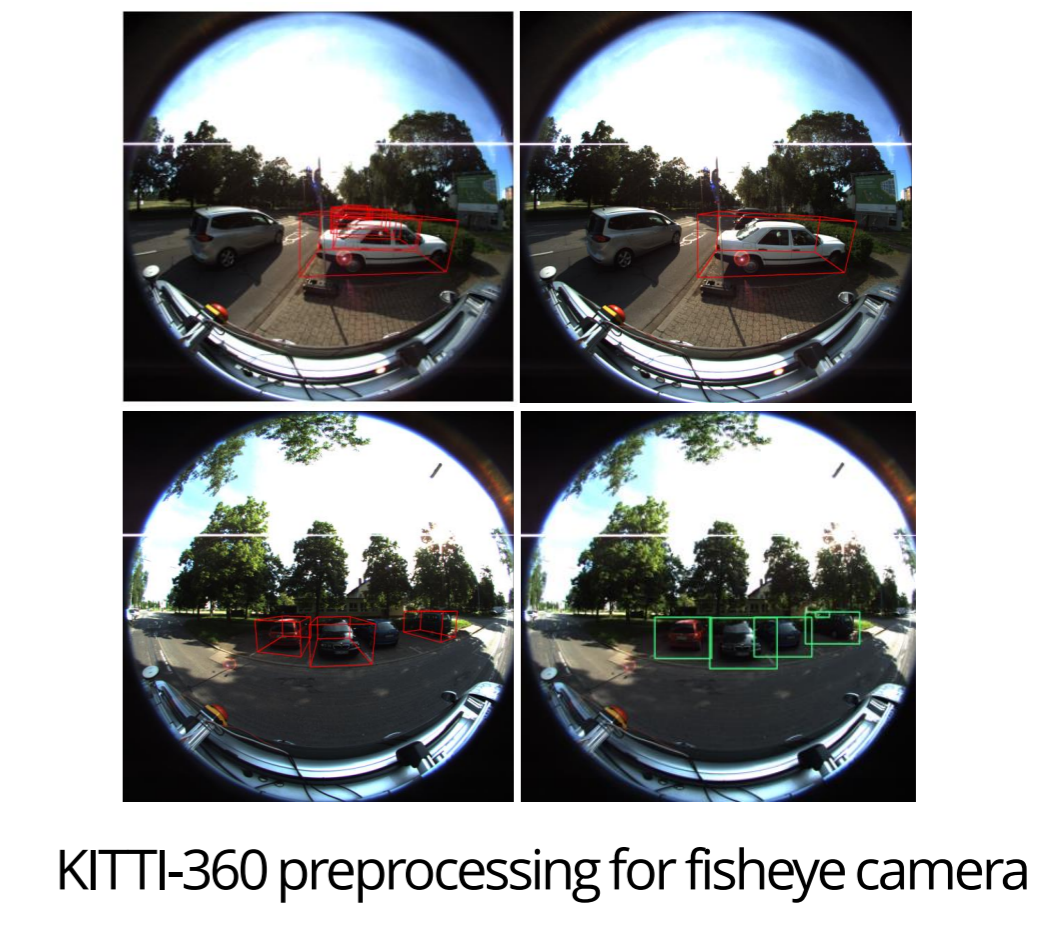

Dataset: Preprocessing KITTI-360

- To train the model with real-world data, the team preprocessed the open-source KITTI-360 dataset, generating new 3D bounding box annotations compatible with the fisheye camera model. The labels were carefully filtered by their distance from the camera and their level of occlusion to ensure data quality.
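The label filtering step might look like the following sketch. The `Box3D` fields, the use of Euclidean distance from the camera, and both thresholds are hypothetical placeholders. The source states only that labels were filtered by distance and occlusion, not the exact criteria.

```python
import math
from dataclasses import dataclass

@dataclass
class Box3D:
    """Minimal 3D box label in the fisheye camera frame (hypothetical fields)."""
    x: float
    y: float
    z: float
    occlusion: float  # assumed fraction occluded: 0.0 = fully visible, 1.0 = fully hidden

def filter_labels(boxes, max_distance=30.0, max_occlusion=0.5):
    """Keep boxes within max_distance meters of the camera and at most max_occlusion occluded.
    Both default thresholds are placeholders, not the values used in the work."""
    kept = []
    for b in boxes:
        dist = math.sqrt(b.x ** 2 + b.y ** 2 + b.z ** 2)  # Euclidean distance to the camera center
        if dist <= max_distance and b.occlusion <= max_occlusion:
            kept.append(b)
    return kept

boxes = [Box3D(0.0, 0.0, 10.0, 0.1),  # near and mostly visible: kept
         Box3D(0.0, 0.0, 50.0, 0.0),  # too far: dropped
         Box3D(3.0, 0.0, 4.0, 0.9)]   # heavily occluded: dropped
kept = filter_labels(boxes)
```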

Results

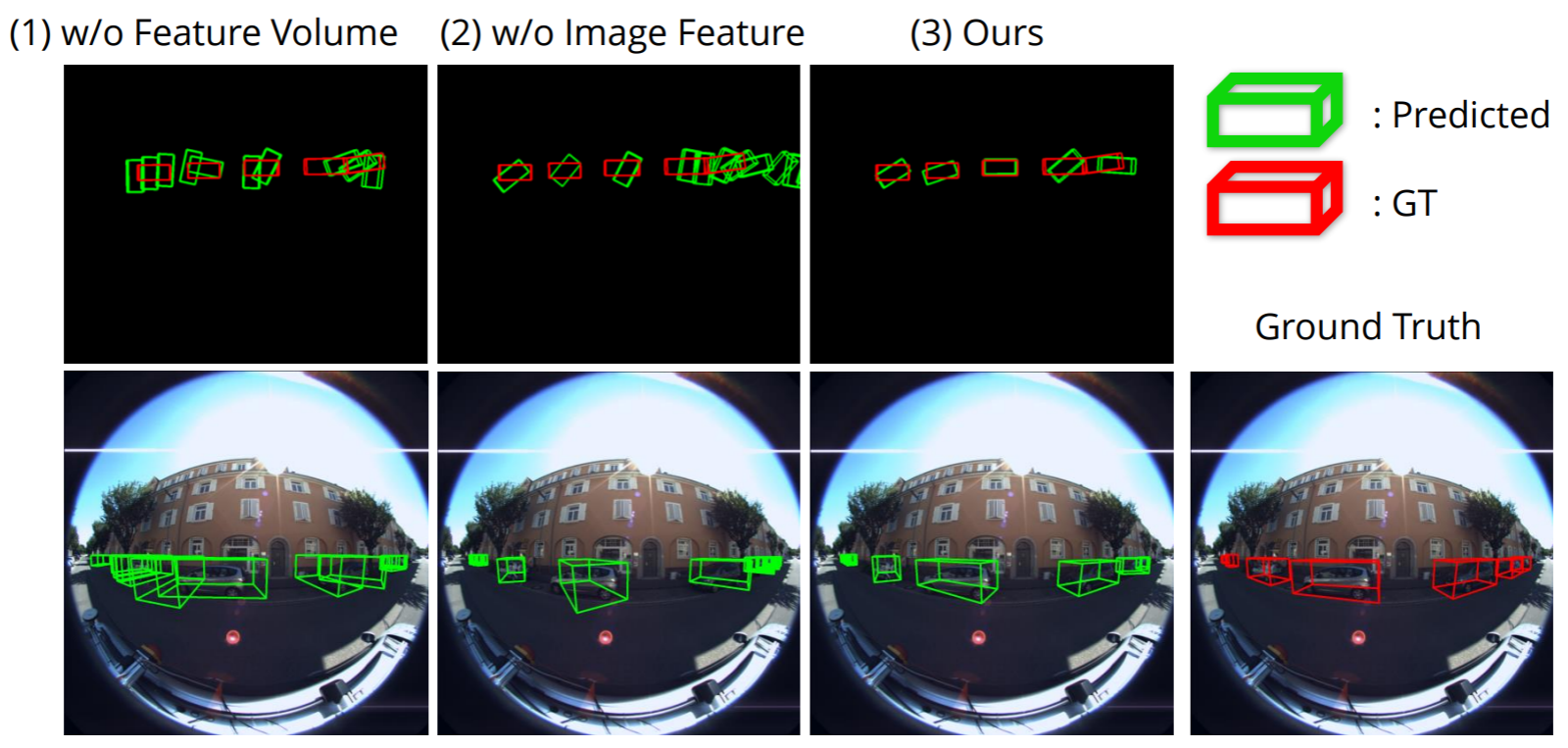

- Quantitative: The complete model achieved the highest accuracy among the evaluated variants, reaching a 3D mAP of 2.2743. An ablation study confirmed that both the depth-informed Feature Volume and the Image Features are critical for achieving the best performance.

- Qualitative: Visual results demonstrate that the full model more accurately predicts both the position and orientation of 3D bounding boxes compared to incomplete versions of the model.